Those of us who have had the privilege of seeing that astonishing Stanley Kubrick film saw it coming. Arguably the greatest sci-fi film ever made, 2001: A Space Odyssey (1968) dealt with seemingly staple science-fiction themes: evolution, technology, artificial intelligence (AI) and extraterrestrial life. But Kubrick was no Cold War pushover. Instead, he assembled his intense dislike of campy cheapness and tacky thrills, science writer Arthur C Clarke, spectacular special effects, minimal dialogue, surreal imagery, and the music of Johann and Richard Strauss. The resultant kinetic energy and meditative power of the film remain unrivalled, but what genuinely lingers is how deeply Clarke and Kubrick were philosophically concerned with the limits of human intelligence. And here intelligence meant two things: that we are cognisant of ourselves, and that we are able to decipher similar intelligence elsewhere. The pivotal scene in which astronaut Dave switches off HAL to quash its bid to control the ship is, in some ways, a metaphor for the predicament that humanity in general, and cinema in particular, faces in the present.

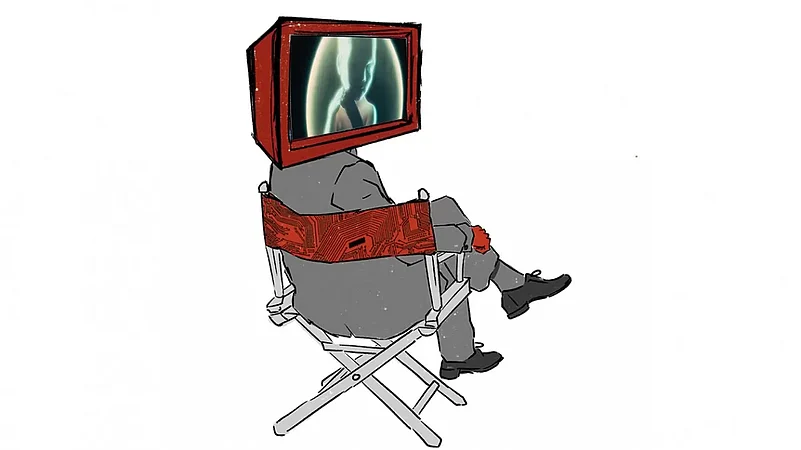

Homo Technologicus: The Threat of AI to Cinema

Cinema and technology, a besotted couple across the arc of industrial modernity, are headed for a split

Should we be insurgent and switch off the seduction of futuristic technology, or should we grudgingly hand it over to machines?

But it did not begin this way. For, through the arc of western industrial modernity, technology was cinema's steadiest and most reliable bedfellow. In fact, it would not be an exaggeration to say that cinema came in the wake of the technologies of reproduction that became a fetish of the rapidly industrialising West. From the large-scale ticketed painted panoramas that debuted in the years of the botched French Revolution to the dioramas of the 1830s, from the daguerreotype to the stereoscope, from the magic lantern to the zoopraxiscope and the Kinetoscope, it is not difficult to see how the 19th century, moving consciously and stealthily through each decade, marched towards cinema, which came into being, after several false starts, in 1895. And then, within cinema, technologies galloped: from bigger and better film stocks to sound equipment, from free fairground shows to ticketed nickelodeons, from natural to artificial lighting; talkies and colour, CinemaScope and Panavision, VHS and CDs, Dolby to IMAX, and so on and so forth.

Then, everything went digital.

These were all technologies in the textbook sense: each, in its own time, a friendly architecture of advanced equipment built on sound scientific knowledge and observation of the motion of light and human optics on one side, and of photosensitive salts and chemicals on the other. They were also technologies because they enhanced the power of cognition. This was most famously articulated in philosopher Walter Benjamin's proclamation that photography revealed an optical unconscious, bringing within the purview of sight objects that had been considered beyond it. Cinema went a step further and made everything that had so far only been imaginable viewable as well. As early as 1902 and 1903, the likes of Georges Méliès and Edwin S Porter were making wondrous films such as A Trip to the Moon and The Great Train Robbery. From the dawn of cinema to today's sprawling superhero fantasy universes, cinema has been consistently fortified by advances in visual, aural and simulation technologies.

Through cinema, technologies of visuality found their most expressive vehicle. Yet within this broad relationship of dependence there also emerged some of the most poetic critiques of technology and scientism, from Charlie Chaplin's Modern Times and Jacques Tati's Playtime to Spike Jonze's Her and, more recently, Wes Anderson's Asteroid City.

Benjamin and other philosophers of modernity, such as Siegfried Kracauer, also pointed out that cinema was more than a technology wedded to the ancient art of storytelling. Cinema was the language of modernity itself, the vocabulary of a present so ephemeral that it was constantly in flight from itself. This was reinforced along twin axes of cognition: space and time. Recent scholars such as Mary Ann Doane have written (in The Emergence of Cinematic Time) about how time, rationalised, abstracted and reified through watches, routines, schedules and organisation, was heavily reconstituted by modernisation, and then by cinema. This relationship was complicated by another founding principle of modernity: mobility.

As trailblazing scholar Giuliana Bruno says in her Atlas of Emotion, 'On the eve of cinema's invention, a network of architectural forms produced new spatio-visuality. Such ventures as arcades, railways, department stores, the pavilions of exhibition halls, glasshouses and winter gardens incarnated the new geography of modernity. They were all sites of transit. Mobility—a form of cinematics—was the essence of these new architectures. By changing the relation between spatial perception and bodily motion, the new architectures of transit and travel culture prepared the ground for the invention of the moving image, the very epitome of modernity.' This held true until yesterday, when cinema thrived in a spatial and temporal ecosystem saturated with immersive technologies that reinforced its artistic, commercial and visual dominance. And yet, cinema was at heart still an anthropocentric form: it needed sweat, passion, labour, insight, perception and, most importantly, imagination. A good example is Christopher Nolan's Oppenheimer. Shot gloriously on film (not digital) with minimal VFX, Oppenheimer harnesses technology for magisterial cinematic storytelling, and yet warns unequivocally that the genius of science lies perilously close to Armageddon.

Perhaps it is ironic that in the year of Oppenheimer, AI has most explicitly signalled how cataclysmic it could prove for the cosy couplehood of cinema and technology.

But AI was not unexpected. Industriality, modernity and cinematics were the Holy Trinity of Technology ushered in by humans over the last two and a quarter centuries. Through this period, now often called the Anthropocene, humans have, apart from causing enormous damage to the planet, alienated every other being from their sphere of contact, only to have them re-produced through technologies of simulation. But we often forget that each step towards technological virtuosity was also a step towards automation, which has inevitably begun to alienate humans, too, from their own being.

Cinema, in all its glory, was also a giant step towards mankind slowly freeing itself from the 'burden of imagination'. With the unveiling of AI, we have set out on the path of complete capitulation to a mode of cognition and consciousness that is wholly derived. The fear of AI's domination stems from the perception that it does not need any human hand or eye to 'make cinema'.

In that sense, AI is not an assistive technology for cinema like every other advance of the industrial age. AI is an idiomatic universe in itself: a self-perpetuating, self-generating ghost technology that no longer needs any external support to colonise consciousness. It can explore, extend and explode upon us on its own. In other words, AI can easily thrive, for it might as well kill cinema to feed upon its flesh.

For Kubrick, humanity's refusal to hand it over to the machines could only happen in cinema, for it was there that humanity most merrily gave itself over to the immersive experience of pure technology. But we must remember what HAL said to Dave: "This mission is too important for me to allow you to jeopardise it."

If humans are to remain humans and cinema cinema, the question we must ask ourselves is: is it?

(Views expressed are personal)

Sayandeb Chowdhury teaches in the School of Interwoven Arts & Sciences (SIAS), Krea University, India

(This appeared in the print as 'Homo Technologicus')
